The Ongoing Computer-Internet Revolution

Note: Like the above posts, these are simply my own interpretations and opinions. It might seem like a lot of information, but the links are only there for reference and/or further exploration. Their inclusion is not necessarily an endorsement of all of their contents, although I did try to stick with free resources, things of historical significance, and items that seemed helpful in some way. If you find something useful, please consider making a backup of it.

Contents

• A General Sociological Framework

• Realigning Our Systems With A Humanistic Purpose

• The Transformation of Subcultures

• Countercultural Expansion

• Conclusion

A General Sociological Framework

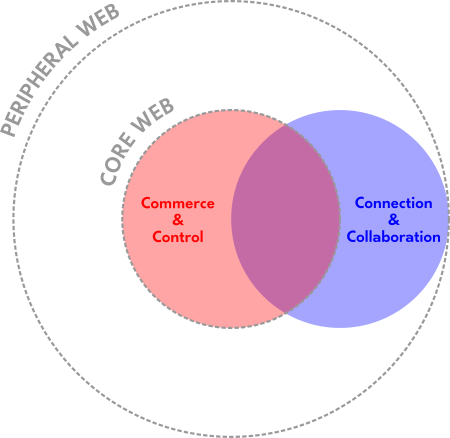

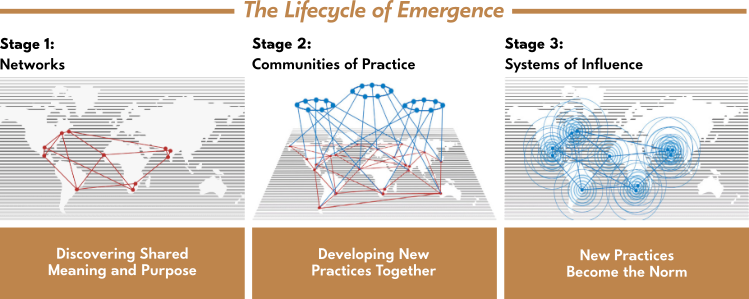

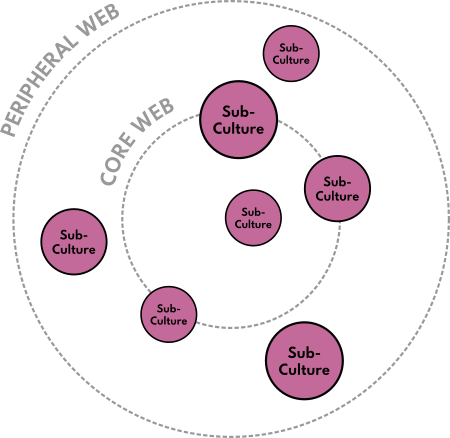

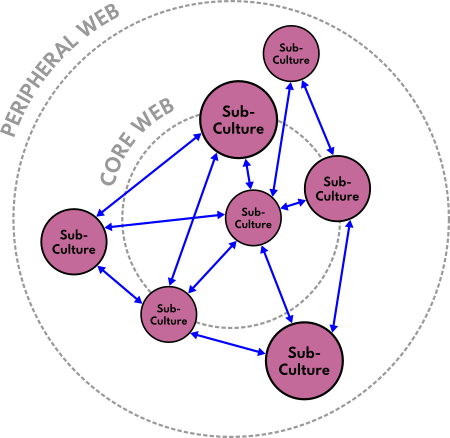

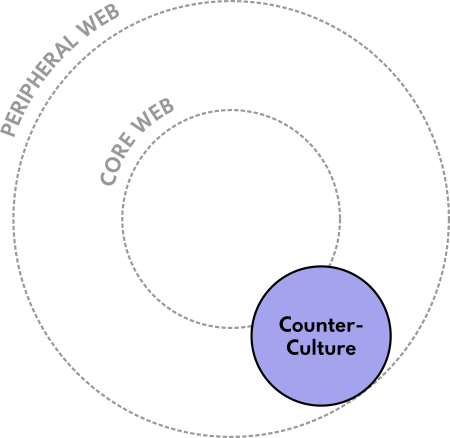

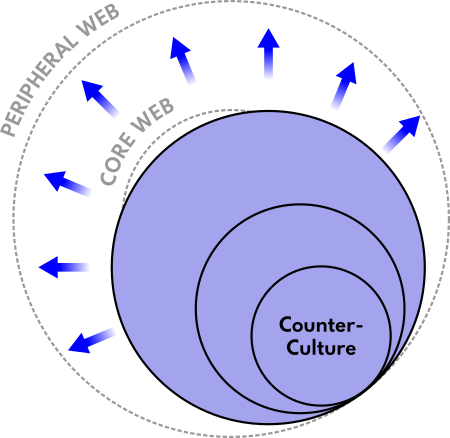

Within the Yesterweb Summary, the terms Core Web and Peripheral Web are defined. We will visualize that relationship as two concentric circles, shown here with dotted lines:

Inside of them are two more overlapping circles forming three colored areas (in red, blue, and purple). These areas are a simplified representation of the motives of everyone using both the Core Web and the Peripheral Web. [This aspect of the diagram is based on @oistepanka's concept of "Red", "Blue", and "Purple" online spaces.] Again, the Core Web is defined by systems that seem to be predominantly focused upon Commerce and Control, while the Peripheral Web has many areas that emphasize genuine Connection and Collaboration. The two can overlap quite a bit though because people are diverse.

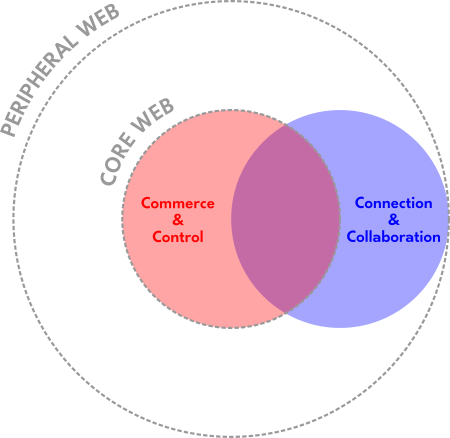

To paraphrase the Summary, the movement of an individual from the Core Web to the Peripheral Web is often based upon how aware they are of the history and structure of the Internet, how various online "platforms" and "services" currently operate, and so on. That understanding can influence what they perceive to be the source of problems at each level of scale and how they attempt to solve them. Those approaches are generalized within the Summary as either "Regressive" or "Progressive" in nature. To summarize all of this information in table form:

Some might interpret such language as divisive, but we are not trying to alienate anyone here. People's experiences and intentions are not necessarily rigid or simplistic either. However, if I were trying to pinpoint the defining characteristic of a "Regressive Stance", it would seem to be the tendency to operate with prejudice and blame, whereas a Progressive Stance would take responsibility for one's part within a system that is destructive with the hope of changing it for the better.

How can we help to facilitate the transformation of a Regressive Stance into a Progressive one across all levels of scale (represented by the two green arrows on the above table)? In other words, how can we engage individuals and groups in constructive ways until all systems are wholly transformed from both within and without for everyone's benefit as much as possible?

One can assist this process by sincerely attempting to live out the Manifesto and implementing the Social Etiquette within one's own behavior both online and off. Let's explore some other ideas that might help.

Realigning Our Systems With A Humanistic Purpose

We can learn a lot about the current state of computing and the Internet by going into a bit of the history behind how this situation has developed. It can also help us to determine constructive actions that we can take right now to help unfold its potential. The following will cover a few broad strokes, spanning from the late 1950's to the early 2000's, and will be biased towards events that have happened within the United States. I will try to keep it "non-technical" so that anyone can read it. If a part does not make sense, please skim over it and return to it later...

To "compute" is to do math. The history of computing is ancient, but the electronic devices that we normally associate with the activity only started to become a worldwide phenomenon within the 1950's due to an increased understanding of how to produce a "transistor". A transistor is a component that acts like a tiny switch. By turning it off and on, we can control the flow of electricity within a circuit. If we combine enough of them together into logical patterns, we can make electrical circuits that can carry out all sorts of different tasks. Transistors are made out of a special type of material called a "semiconductor". This material can be used to form a whole set of transistors as a single piece. This is called an "integrated circuit", and it is the idea that lies at the basis of what most people now know as a "computer chip".
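To make the idea of "combining switches into logical patterns" a little more concrete, here is a minimal sketch in Python. It is only an idealized model, not a description of real electronics: the function names (`transistor`, `nand`, `half_adder`, `add`, and so on) are my own illustrative choices, and a real chip deals with voltages rather than True/False values. It shows how one kind of switch, wired into one kind of pattern, is enough to do simple addition.

```python
def transistor(gate: bool, source: bool) -> bool:
    """Idealized switch: the input passes through only while the gate is on."""
    return source and gate


def nand(a: bool, b: bool) -> bool:
    # Two switches in series: the output is off only when both are on.
    return not transistor(b, transistor(a, True))


# Every other pattern below is built from NAND alone.
def not_(a: bool) -> bool:
    return nand(a, a)


def and_(a: bool, b: bool) -> bool:
    return not_(nand(a, b))


def or_(a: bool, b: bool) -> bool:
    return nand(not_(a), not_(b))


def xor(a: bool, b: bool) -> bool:
    return and_(or_(a, b), nand(a, b))


def half_adder(a: bool, b: bool) -> tuple[bool, bool]:
    """Add two one-bit numbers, returning (sum, carry)."""
    return xor(a, b), and_(a, b)


def full_adder(a: bool, b: bool, carry_in: bool) -> tuple[bool, bool]:
    """Add two bits plus a carry from the previous column."""
    s1, c1 = half_adder(a, b)
    s2, c2 = half_adder(s1, carry_in)
    return s2, or_(c1, c2)


def add(x: int, y: int, width: int = 4) -> int:
    """Add two small numbers one binary column at a time (results wrap at 2**width)."""
    carry = False
    total = 0
    for i in range(width):
        bit, carry = full_adder(bool(x >> i & 1), bool(y >> i & 1), carry)
        total |= int(bit) << i
    return total


print(add(2, 3))  # 5
```

The point is not the code itself, but that "doing math" can be reduced to many copies of the same tiny on/off decision.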

The computer chip led to the creation of the general-purpose "minicomputer" throughout the 1960's. Before this time, most computers were gigantic in size, incredibly expensive to build, finicky to operate, and used for specialized applications, like scientific research related to the military.

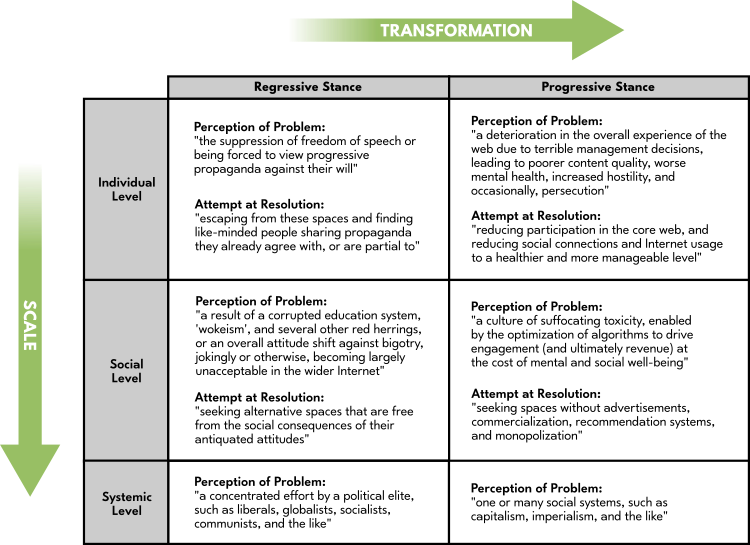

Thankfully, many of the people involved in the development of computers wanted to apply them towards helping humanity rather than destroying it. As early as 1945, the engineer Vannevar Bush encouraged scientists to use this kind of technology for peaceful purposes. Towards this end, he designed an electromechanical device intended to aid one's memory by organizing information. He called it a Memex. It had projector screens that would display documents from rolls of microfilm, and one could write personal notes on them or make links between different parts.

[Image from Jörg Rädler]

Inspired by this work, as well as that of several others, the researcher Douglas Engelbart put together the fantastic 1962 paper, Augmenting Human Intellect: A Conceptual Framework. The general premise of this document is that we, as in humanity as a whole, will eventually need to formulate new ways to solve complex problems together. Our survival literally depends upon it. The various ways that we can extend or "augment" our capabilities were distilled into the acronym, H-LAM/T. This stands for: "Humans using Languages, Artifacts, and Methodologies, in which they are Trained".

We don't need to know all of the details behind how human intelligence "works" to meaningfully augment it. And further, computers could be tools (or "artifacts") that aid that process. Doug conjectured that this could "feedback" or build upon itself so that teamwork becomes exponentially better over time. To put it another way, the "Collective IQ" of a group can start to rapidly increase when the things that are made together help everyone to work together more effectively. [This is similar to the concept of Moore's Law, which describes how the number of transistors that fit on a computer chip keeps doubling as manufacturing methods become more refined.]

Doug worked at the Stanford Research Institute (SRI) in Menlo Park, California. He led a division called the Augmentation Research Center (ARC) that attempted to test out this hypothesis. By 1968, the ARC team had created what they called the oN-Line System. They gave a demonstration of it at the Fall Joint Computer Conference in San Francisco (a little over 30 miles away from Menlo Park). Later dubbed "The Mother of All Demos", the presentation showed many of the features of modern computing for the first time in an already highly developed form, everything from using a mouse for navigation to interacting with far-away people through video conferencing. This is absolutely incredible considering the state of computers at the time. But what is even more important is that all of this was specifically created as a set of collaborative tools to help increase everyone's intelligence! It was both self-empowering and deeply unifying.

Unfortunately, much of the work and the design philosophy behind it remained obscure. To remedy that, Doug spent the rest of his life teaching others how to apply the same method that the ARC team used when creating the oN-Line System. He showed how we could structure organizations for the purpose of trying to solve world problems. I will attempt to give a short personal summary of it here...

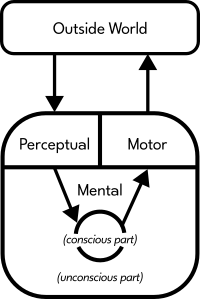

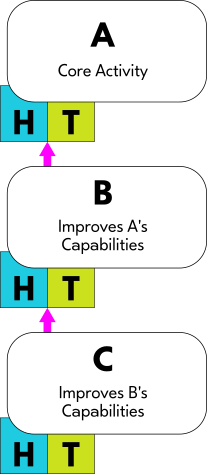

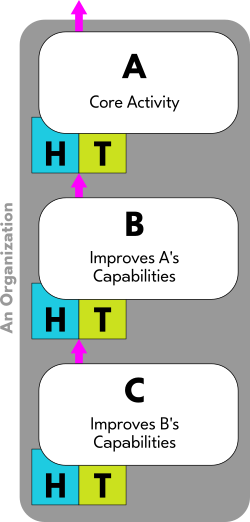

This is a highly simplified model of the basic capabilities of a human being:

We perceive the outside world through our senses, our "perceptual system". We transform the data that we receive through our senses with our "mental abilities". We may not be aware of everything, so it has both "conscious" and "unconscious" aspects. We express action through our "motor functions" (i.e.: anything that causes movement of the body, like the muscles). In turn, this affects the outside world. One can follow the black arrows within the above diagram to get an overall idea of this relationship.

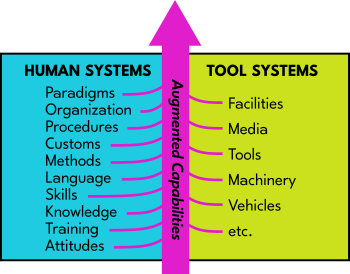

We also make "human systems" and "tool systems" to extend our capabilities. Generally, human systems are made up of concepts, while tool systems are made up of physical objects. Together, they form what Doug calls an "Augmentation System":

In some ways, the growth of our tool systems is currently outpacing the growth of our human systems to the point that it is causing us problems (e.g.: social disintegration, ecological damage, etc.). To stay balanced, human systems and tool systems have to "co-evolve", or develop in complement to one another. This co-evolution is represented within the above diagram by a magenta arrow.

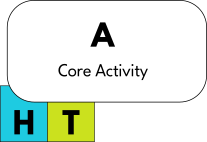

We can make a simplified representation of the Augmentation System by using a rectangle divided into two halves, each half containing only an "H" and a "T":

We will symbolize the main activity of an organization, what it "does", as a box labelled "A":

Underlying this activity is an Augmentation System (i.e.: there are human systems and tool systems that are involved all throughout it). We will represent this as a small HT box to the bottom-left of A:

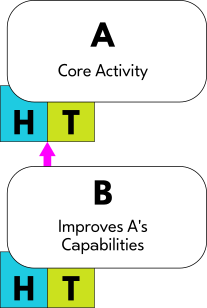

Whenever we attempt to make that activity more effective, we are actually doing another kind of activity that we will call "B". B activity increases the capabilities of A by altering its Augmentation System in some way. We will symbolize this as a magenta arrow reaching from B into A through its Augmentation System in the following manner:

Similarly, if we try to become more effective at increasing effectiveness itself, then we are doing yet another kind of activity. We will call that type of activity "C", and it too impacts B through its Augmentation System. Again, we will use a magenta arrow to symbolize this:

To use an analogy to help clarify: If we think of A as some kind of skill that we want to get better in, B would be the knowledge that we need to do that, and C would be like learning how to learn. Notice how each of these augments the one that comes before it in some way. C makes B easier, while B makes A easier.

All together, this model is called "The ABCs of Organizational Improvement":

The ABCs of Organizational Improvement give a general idea of how to make a group more effective at whatever it does. However, it is imperative that we make all of these activities constructive for everyone!

Not all organizations have B and C-types of activities, but if they do exist, it is important to become aware of them. If they don't exist, then we need to figure out how to create them. Only then can we implement what Doug calls a "Bootstrapping Strategy". In this context, to "bootstrap" means to direct the activities of an organization towards something that will raise its Collective IQ as rapidly as possible. Two things are needed to facilitate this process:

1. Each aspect of the organization needs to continuously combine the information that it gathers with the records of what has already been done, so that new knowledge can be created and applied. Doug refers to this as Concurrent Development, Integration, and Application of Knowledge (or CoDIAK, for short). The materials that are compiled as a result of this process are known as a Dynamic Knowledge Repository (or DKR).

2. There has to be a communications system in place that allows information to be easily shared and collaboratively worked upon across the whole organization. Doug calls this an Open Hyperdocument System (OHS). We won't get into the technical details behind this system, but we can think of the term "hyperdocument" as equivalent to "webpage". This just means that interconnections can be made between all of the different types of materials within the DKR, no matter what form they take (whether it be text, audio, video, etc.). It is essentially a computer through which information is easily accessible.
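For readers who do like a bit of code, the two points above can be sketched as a tiny data structure. This is only a loose illustration under my own assumptions, not Doug's actual OHS design: the class names `Hyperdocument` and `Repository`, the `media` field, and the example titles are all hypothetical. It shows materials of different types being stored together and linked to one another, so that new items can be connected to the record of what already exists.

```python
from dataclasses import dataclass, field


@dataclass
class Hyperdocument:
    title: str
    media: str  # e.g. "text", "audio", "video"
    links: set[str] = field(default_factory=set)  # titles this item points to


class Repository:
    """A toy stand-in for a Dynamic Knowledge Repository."""

    def __init__(self) -> None:
        self.items: dict[str, Hyperdocument] = {}

    def add(self, title: str, media: str) -> Hyperdocument:
        doc = Hyperdocument(title, media)
        self.items[title] = doc
        return doc

    def link(self, source: str, target: str) -> None:
        # Both ends must already be in the repository.
        if target not in self.items:
            raise KeyError(target)
        self.items[source].links.add(target)

    def backlinks(self, target: str) -> list[str]:
        """Everything that refers to `target`, whatever form it takes."""
        return sorted(t for t, d in self.items.items() if target in d.links)


repo = Repository()
repo.add("meeting notes", "text")
repo.add("demo recording", "video")
repo.add("design sketch", "image")
repo.link("meeting notes", "demo recording")
repo.link("design sketch", "demo recording")
print(repo.backlinks("demo recording"))  # ['design sketch', 'meeting notes']
```

Being able to ask "what refers to this?" is one small way that new knowledge gets integrated with what has already been done.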

To put it succinctly, we are attempting to use technology to increase Connection and Collaboration within a group of people as much as possible.

This entire method is scalable. When multiple organizations start to work together by sharing B and C-type strategies, they become known as a Networked Improvement Community (or NIC). [I have touched upon the concept of NICs before.] Each organization could have A-type activities that are distinct from one another, but as a whole, they should function in complement.

It is a helpful framework for structuring communities so that the people both inside and outside of them can use technology to work together more effectively. However, we are still only scratching the surface of what was happening within the early history of computing and the Internet. Let's go back and look at a few other events that were occurring in parallel...

During the 1960's, some academic institutions had computers, but access was often limited to professors and other researchers. One reason for this was because the computers were complicated to control. It required specialist knowledge in order to write the instructions necessary to run them. These sets of instructions are known as "computer programs" (or, more generally, as "software"). The act of writing software is known as "programming" or "coding". It is done with a "programming language".

John Kemeny and Thomas Kurtz were two professors in the mathematics department at Dartmouth College in Hanover, New Hampshire. They attempted to simplify programming by creating the Beginner's All-purpose Symbolic Instruction Code (or BASIC), which first ran in 1964. This programming language helped many students without a scientific background learn how to program computers.

Another reason why access to computers was limited was because of time. Since there was usually only one large computer within each organization, if several people wanted to use it, they had to wait for their turn. This problem was solved by splitting up the different tasks into smaller procedures, and then cycling through each of those in quick succession. If the computer was relatively fast, multiple people could use the same computer at what seemed like the exact same time. Therefore, this approach was called "time-sharing".
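The cycling described above can be sketched in a few lines of Python. This is a simplified "round-robin" illustration under my own assumptions, not how the Dartmouth system (or any real time-sharing system) was actually written: the function name `time_share`, the `time_slice` parameter, and the user names are hypothetical. Each person's task is cut into small slices, and the computer takes one slice from each person in turn until everything is finished.

```python
from collections import deque


def time_share(jobs: dict[str, int], time_slice: int = 2) -> list[str]:
    """Run each user's job a little at a time.

    `jobs` maps a user to the units of work they need.
    Returns the order in which slices ran, as "user:units" entries.
    """
    queue = deque(jobs.items())
    timeline = []
    while queue:
        user, remaining = queue.popleft()
        work = min(time_slice, remaining)
        timeline.append(f"{user}:{work}")
        if remaining > work:
            # Not finished yet: go to the back of the line.
            queue.append((user, remaining - work))
    return timeline


print(time_share({"ann": 3, "bob": 5, "cy": 2}))
# ['ann:2', 'bob:2', 'cy:2', 'ann:1', 'bob:2', 'bob:1']
```

Notice that nobody waits for someone else's whole task to finish. If the slices are short and the computer is quick, each person feels like they have the machine to themselves.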

Again, John Kemeny and Thomas Kurtz implemented one of the first time-sharing computer systems in existence at Dartmouth. This was done upon the recommendation of a colleague at the Massachusetts Institute of Technology (MIT), located within the city of Cambridge, Massachusetts. To quote:

Kurtz approached Kemeny in either 1961 or 1962, with the following proposal: all Dartmouth students would have access to computing, it should be free and open-access, and this could be accomplished by creating a time-sharing system (which Kurtz had learned about from colleague John McCarthy at MIT, who suggested "why don't you guys do time-sharing?")...

In practice, each person was connected to a single computer through their own "terminal", a device which could send and receive data to and from that computer. At first, these terminals were "teletypes", a kind of electric typewriter that would print out what was happening. They were eventually replaced with "video display units", television-style screens with a keyboard attached to them.

Time-sharing created a method for multiple people to work on complex projects together simultaneously. This would have a big impact on society in the coming decades...

There were many Countercultural movements within the 1960's, each of which addressed various social issues that are still important (like civil rights, sex/gender equality, world peace, and environmentalism). Thanks to groups like Students for a Democratic Society (SDS), many college-aged youth started to explore the concept of "participatory democracy" and noted the importance of open communication within this process. [A wonderful description of how computers can interface with these concerns is given within the article Participatory Democracy From the 1960s and SDS into the Future Online by Michael Hauben (from Vol. 11, No. 1 of The Amateur Computerist magazine).] We will highlight a few examples here and try to provide some context for them.

Lee Felsenstein was an electrical engineering student at UC Berkeley, a college in the city of Berkeley, about 13 miles from San Francisco. He was part of the Free Speech Movement protests that took place there in 1964. To quote [*including all of the extra explanatory links of the original]:

With the participation of thousands of students, the Free Speech Movement was the first mass act of civil disobedience on an American college campus in the 1960s. Students insisted that the university administration lift the ban of on-campus political activities and acknowledge the students' right to free speech and academic freedom. The Free Speech Movement was influenced by the New Left, and was also related to the Civil Rights Movement and the Anti-Vietnam War Movement. To this day, the Movement's legacy continues to shape American political dialogue both on college campuses and in broader society, influencing some political views and values of college students and the general public.

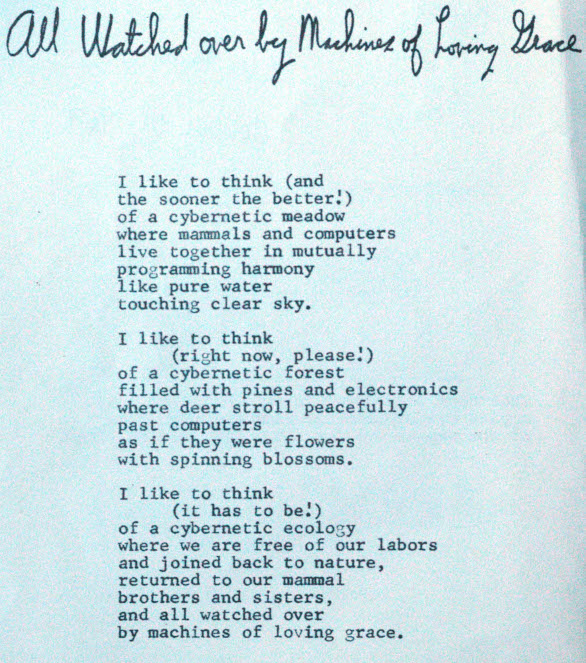

Lee was also deeply impacted by these events. He started writing for an underground newspaper called The Berkeley Barb [Content Warning]. His desire for change was expressed throughout his work involving computers as well. There was both a hopefulness about what computers could help humanity to do, and an exasperation at what they had already been used for, such as facilitating war. This is captured beautifully within the 1967 poem, All Watched Over by Machines of Loving Grace by Richard Brautigan:

[Image from Alexander Rose of The Long Now. Notice the theme of automation helping us to be free from toil so that we can focus on getting along peacefully with Nature and each other. Of course, it does not require any special tools to honestly try to get along with one another or our environment. Ultimately, it is the values that we choose to act upon that determine the type of technology that we create and how we use it, not the other way around.]

Lee started a personal engineering practice named after this poem. It was called Loving Grace Cybernetics (or LGC Engineering, for short). It is interesting to note that the poem was first published by The Communications Company located within the Haight-Ashbury neighborhood of San Francisco. This area was a hotspot for Countercultural activities, especially those associated with a group of performers / activists named The Diggers, of which The Communications Company was a part. It is worth exploring their history, philosophy, and practices, especially the work of Gerrard Winstanley that serves as their inspiration. To quote [*with an extra explanatory link added by me]:

Another well-known neighborhood presence was the Diggers, a local "community anarchist" group known for its street theater, formed in the mid to late 1960s. One well known member of the group was Peter Coyote. The Diggers believed in a free society and the good in human nature. To express their belief, they established a free store, gave out free meals daily, and built a free medical clinic, which was the first of its kind, all of which relied on volunteers and donations. The Diggers were strongly opposed to a capitalistic society; they felt that by eliminating the need for money, people would be free to examine their own personal values, which would provoke people to change the way they lived to better suit their character, and thus lead a happier life.

Around this same time, some inner city groups carried out similar projects (e.g.: the Free Breakfast for School Children Program of The Black Panther Party in Oakland, The People's Church of The Young Lords Organization in Chicago, and so on). Inequality created severe poverty within their neighborhoods and they tried to fix it from within. "Hippie" communes also started to crop up all throughout the countryside as many people attempted to flee "The Establishment", test out new social structures, and live by a "back-to-the-land" philosophy. There was generally a lot of fear around how radically different people seemed to be acting in comparison to the past few generations.

In the late 1960's, Stewart Brand went on a road trip to several of these types of communities. Stewart was actually a biologist / artist who helped to film "The Mother of All Demos". He had connections to collectives of artists that were dabbling in the creation of audio-visual experiences (or "multimedia"). This included USCO in New York and The Merry Pranksters in Oregon. Stewart and his wife, Lois, eventually settled down in Menlo Park, California. They converted their truck into The Whole Earth Truck Store and sold homesteading equipment.

They released the first Whole Earth Catalog in 1968 to share all of the knowledge that they had accumulated throughout their trip, and to promote some of the tools available at their small shop. This magazine was published by The Portola Institute and jam-packed with information, all of it presented in a unique style. There was no rigid layout. Blocks of text floated freely among photos and diagrams. Both the content and the presentation left a deep impression on many who read it.

This focus on self-sufficiency coincided with the creation of many "food conspiracies" around that same area. To quote:

Food Conspiracy is a term applied to a movement begun in the San Francisco Bay Area in 1968 in which households pooled their resources to buy food in bulk from farmers and small wholesalers and distribute it cheaply. The name came to describe a loose network of autonomous collectives which shared common values and, in many cases, suppliers. Many participants were seeking an alternative to supermarkets and became involved to obtain direct control of the quality and type of food they were sourcing, with a strong focus on wholefoods and organic produce.

The adoption of the name 'food conspiracy' has been described by a participant as a "response to the Nixon-Agnew rhetoric of the time (which) had us all as communist conspirators against the state, the war and public morality."

By the mid-1970's, some of these food conspiracies transformed into a collective called the People's Food System. To quote:

In the 1970s, collaborative "food conspiracies" began to recede and food activists founded a network of worker-owned cooperative stores and businesses that became known as the People's Food System. Offering employment to recently paroled prisoners and refugees from Central America, the system put its values into practice internally and externally. Campaigns to improve nutrition and to understand better the politics of international food industries, as well as the politics of various food choices, helped seed consumer demand for healthier and more organic alternatives, later widely adopted by mainstream grocery stores and restaurants.

[Pam Peirce, one of the activists involved, has given an interview and written an essay on the topic.]

This is just one example of how the mix of Nature, community, and technology steadily transformed throughout the 1970's.

The United States has a history of people forming "utopian communities", and the sociologist Rosabeth Kanter released a book in 1972 that analyzed why some of the longest lasting ones had survived. That same year, the historian Christopher Hill released the book, The World Turned Upside Down: Radical Ideas During the English Revolution, which explored some of the movements that had influenced them. These kinds of ideas were given new expression as the Counterculture began to more thoroughly seep into academia throughout the world. Scientists and scholars had become activists, committed to creation rather than destruction as a form of "direct action". To give a few more examples:

The Biotechnic Research and Development (BRAD) group made a hippie-like commune to develop the "appropriate technology" concepts of E. F. Schumacher. Appropriate technology encourages the use of small-scale devices that take into account the impact that they have on the environments of which they are a part. The New Alchemy Institute (NAI) did similar research into ecology and sustainable design, publishing it within journals adorned with psychedelic-looking sacred art. Judson Jerome, a professor of poetry at Antioch College, even got a grant to visit and systematically study some of the communities that existed at the time. This research culminated in his 1974 book, Families of Eden: Communes and The New Anarchism. Appropriate technology groups would exist all over the world by the end of the decade.

Ralph Scott was a student of the visionary architect Buckminster Fuller. In 1970, Ralph leased a warehouse in San Francisco with the intention of making a sort of "technological commune". It eventually became known as Project One, a collective of teachers, artists, engineers, activists, and people of many different interests who genuinely wanted to share information and tools. Lee Felsenstein was part of a subgroup named Resource One.

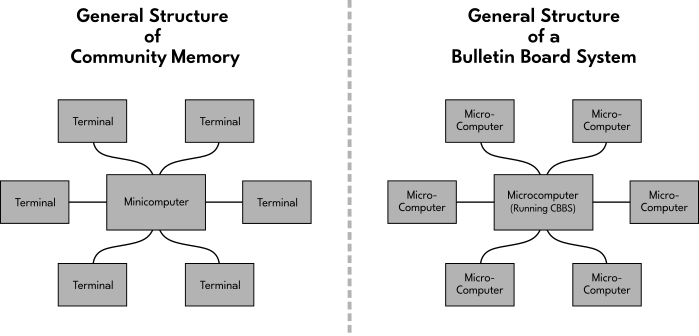

1973 was a fruitful year. Resource One got ahold of one of the computers that was used within "The Mother of All Demos". They set it up to do time-sharing with everyone in the neighborhood through a small handful of terminals. One was placed within a local record shop, another in a city library, and more were added at other locations as time went on. Anyone could read messages stored on the computer for free, or write a message to it for 25 cents. It functioned something like a public bulletin board, with people using it for a variety of different reasons. Resource One called this system Community Memory, and they learned a lot of lessons about social organization through it. To quote [*with an extra link added by me]:

Since the terminal was many people's first introduction to the computer, someone from the group sat next to the terminal to explain how to use it. They were unsure if they would have to dispel notions of centralized authority that were commonly associated with computers at the time. "While we had expected a certain amount of wariness or outright hostility, we found almost universal enthusiasm," wrote Felsenstein. The terminal took on a life of its own beyond the group's expectations that it would just be used to discuss housing, jobs, and cars. Musicians broadly embraced the system and music quickly became the most discussed topic. People began using the terminal for writing poetry, creating typewriter graphics, and posting about subjects ranging from art, politics, women's advocacy, dreams, and in one specific instance, where to get a decent bagel in the Bay Area. In response, a local baker offered their phone number and free lessons for how to make bagels. This validated the group's hopes for informal learning exchanges as written about by Ivan Illich in Deschooling Society [...] the content was shaped entirely by and synchronous to the community...

The book Tools for Conviviality by Ivan Illich was also published in 1973. The general premise of this book is that "tools" of all kinds, which includes how our organizations are structured, can become opposed to their constructive purposes to the point that life is undermined by them. To be "convivial" is to be friendly with one another. The author uses this term to describe a way of thinking that considers the impact that our tools have on each other and how we might intentionally transform them to be of benefit to everyone. [It is a relatively short book that would probably make a good selection for the book club.]

Lee's father gave him a copy of this book and it inspired him to apply his electrical engineering skills towards the making of "convivial" tools. For example, the equipment needed to run Community Memory was very expensive, so he tried to make it cheaper and more accessible to people. He designed a device known as the "Pennywhistle Modem" that same year, and came up with an idea for a "Tom Swift Terminal" the following year.

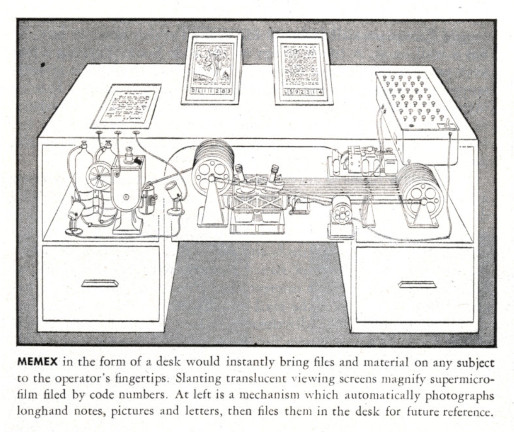

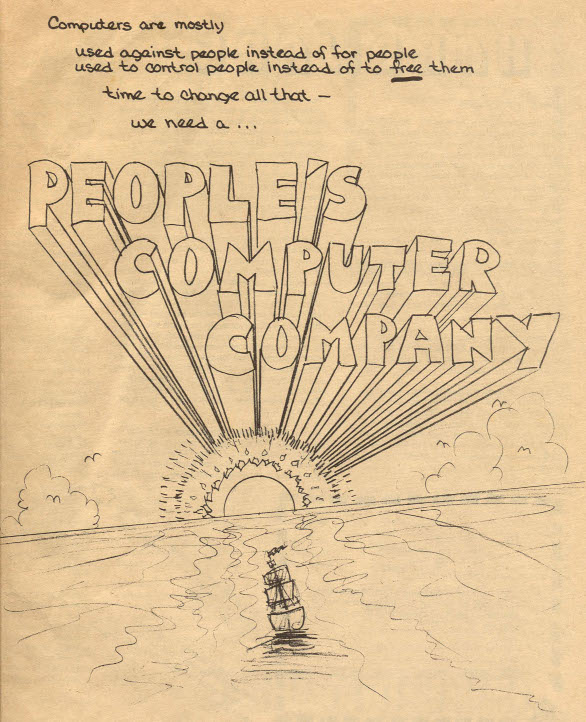

Meanwhile, another organization that helped bring computers into public awareness was the People's Computer Company (PCC). It was founded in late 1972 by Bob Albrecht, Dennis Allison, George Firedrake, and several others. Their newsletter was also published by The Portola Institute and modelled after the Whole Earth Catalog. Through it, they tried to spread computer literacy (e.g.: by playfully teaching everyone how to use BASIC). The introduction on the front cover of the very first issue encapsulates this social purpose well:

[Image taken from previous link. To reiterate the text on it: "Computers are mostly used against people instead of for people, used to control people instead of to free them. Time to change all that - we need a People's Computer Company." Indeed, what good is technology that enslaves rather than empowers?]

The People's Computer Company also started the Community Computer Center in Menlo Park. Similar to Resource One, they got ahold of a computer that they wanted to share with the public. They would have potluck dinners and teach people how to program by making video games on it for a fee of approximately 50 cents.

In 1974, another computer enthusiast named Ted Nelson published Computer Lib / Dream Machines. Like the PCC Newsletter, this book was also styled after the Whole Earth Catalog and attempted to foster computer literacy by demystifying how computers operate. Large portions of the book cover the historical development and social implications of computing. Reading it is like looking into a time capsule. Computers were still incredibly large and expensive, outside the reach of most people. But all of that was about to change...

Micro Instrumentation and Telemetry Systems (MITS) was a company that was founded in late 1969 by Ed Roberts and Forrest Mims III after they had both left the U.S. Air Force. Ed was a computer engineer and Forrest is an amateur scientist. Like the chemistry set of previous generations, kits for experiments in electronics were wildly popular. MITS originally sold kits for building radio transmitters to track how high model rockets flew. That kind of tracking is known as "radio telemetry", hence the "T" in the name "MITS".

By 1974, MITS had developed a kit for building a small computer that they called the "Altair 8800". Finally, there existed a device that was affordable enough for some computer hobbyists to own! Some of them were shocked when it showed up on the cover of Popular Electronics magazine (January 1975):

[Image taken from previous link.]

One of the first Altair 8800 kits was given to the People's Computer Company for review. It ended up within the garage of Gordon French, a computer engineer. At the Community Computer Center, he had met an activist named Fred Moore who was passionate about using computers for political organizing. Together, they founded The Homebrew Computer Club. The term "homebrew" refers to making things at home. In this case, it was "microcomputers" (also known as "home computers").

They invited others to come and take a look at the Altair 8800 as it sat within Gordon's garage. People immediately tried to extend its capabilities using the data sheets that were handed out during that very first meeting on March 5, 1975. Afterwards, Fred created a newsletter for the group and it started to expand.

Eventually, meetings were held in an auditorium that was part of the Stanford Linear Accelerator Center (SLAC), also in Menlo Park. Electronics hobbyists and researchers from nearby labs would dialogue about computers while Lee Felsenstein would moderate. Then, they would all meet outside to share "computer hardware" (i.e.: the physical parts, like electronic circuits). Some had already built their own computers from scratch and inspired others to do the same. Schematics and lists of resources were printed within the newsletter to make it easy for anyone to do.

For example, in 1976, an engineer named Steve Wozniak demonstrated his Apple I home computer to the club. The Processor Technology company also released the Sol-20 microcomputer, which was based off of Lee's earlier Tom Swift Terminal design.

In this same year, Dr. Dobb's Journal was first published, a spin-off of the PCC newsletter that focused specifically on the programming of microcomputers. Printed on many of its pages were pieces of "source code" (i.e.: the step-by-step instructions that make up a program, which people can understand and modify for their own purposes). The late 1970's saw a plethora of popular magazines being published that were wholly dedicated to the microcomputer (such as Creative Computing, Byte, and Compute!). Both the creation and programming of microcomputers were growing rapidly.

It cannot be emphasized enough how much the Do-It-Yourself (DIY) approach of home computing was facilitated by freely sharing schematics and source code, as well as the easy availability of electronic components that did not require sophisticated tools to assemble. Inventions of all kinds are always built upon shared resources, which includes the accumulation of insights that others have made throughout history.

As a brief aside, we would like to emphasize two important points here:

1. Many companies grew out of The Homebrew Computer Club, one of the most well-known being Apple Inc. We might even go so far as to say that this collection of events strongly encouraged the mass production of microcomputers, and thus led to the ubiquity of computers within our day-to-day lives at the present moment. It literally started with passionate hobbyists and expanded as their Connection and Collaboration increased.

2. One might have also noticed that much of the above took place in and around a relatively small geographic area, in this case, the San Francisco Bay Area. Nowadays, the city of San Francisco is filled with severe poverty, drug use, and violence [Content Warning]. There are many complex reasons as to why that is the case, but a significant factor is behavior based upon Commerce and Control, especially on the part of many people within the "tech companies" that operate there. To quote [*with a couple of extra links added by me]:

Lydia Bransten, Director of The Gubbio Project: "You have companies that used to have four buildings in San Francisco that now have one floor, so all of the infrastructure that has been built for this height is now sort of collapsing. And then to say that the reason San Francisco isn't doing well is because of poor people living on the street who use drugs, it's not real. It's a red herring. We just did a street assessment here in The Mission and 73% of the people that we spoke to were previously employed and housed. Where were they working? They were working in janitorial jobs in those office buildings. All of those businesses have changed their modality, especially those who talk about downtown being closed. Well, downtown used to employ hundreds, thousands of people who were working in low-level, entry-level jobs, as janitors, as all of the things that supported that business. Now, there is no one in those buildings."

Narrator: "Much like the gold tycoons who pillaged the West for its resources centuries ago only to leave behind ghost towns that mark the wild west, big tech has essentially used San Francisco as a playground for over two decades and now has left behind an over priced shell of a once-great American metropolis. Anyways, the story of corporate greed is typically left out of the San Francisco conversation. All you seem to hear about on TV is that San Francisco is an apocalyptic third-world wasteland..."

Notice how points #1 and #2 interrelate. Does Connection and Collaboration degrade into Commerce and Control when people are objectified? To continue...

Two Subcultures were steadily growing throughout the 60's and 70's. Inside of academia, there was the Tech Model Railroad Club (TMRC) that started at MIT in the mid-1940's. TMRC members would salvage "relays" (mechanical switches that can be controlled with electricity) from the local telephone company. Then, they would use them to create complex electromechanical circuits to control the movement of their model trains along their tracks.

When MIT began to house more computers, the same kind of technically-inclined young people made some of the first computer games. They eventually became a group called Project MAC (short for Mathematics and Computation) in 1963. Project MAC helped to create the Multics "operating system", a set of programs that allow the hardware and software of a computer to work together. A group also split off to program the playfully named Incompatible Time-sharing System (ITS), which helped further the use of time-sharing in collaborative computer work.

When they weren't obsessing over computer technology, they explored the restricted areas of campus and played good-natured pranks on their fellow nerds. This pastime, of finding clever ways of doing things, was informally referred to as "hacking". The ones who did it were known as "hackers". The hacker Subculture had its own slang and its own ethic. To quote:

1. "Access to computers - and anything which might teach you something about the way the world works - should be unlimited and total. Always yield to the Hands-On Imperative!"

2. "All information should be free."

3. "Mistrust authority - promote decentralization."

4. "Hackers should be judged by their hacking, not bogus criteria such as degrees, age, race, or position."

5. "You can create art and beauty on a computer."

6. "Computers can change your life for the better."

As the previous link summarizes: Hacking revolved around the principles of sharing, openness, decentralization, free access to computers, and using information and technology to increase democracy and improve the world.

Outside of academia, groups of young people were striving to understand how the telephone system worked out of a genuine sense of curiosity. They were called "phone freaks" (or "phreaks", for short). Their activities were known as "phreaking". At the time, telephones were interconnected by a complex set of relays that communicated with one another through audio frequencies. A sound or "tone" travelling down the phone line would determine where and how a phone call would connect.

Some of the earliest phreaks were people like Josef Engressia Jr. (known by the alias "Joybubbles") and Ralph Barclay. Through personal experimentation, poring over technical journals, and other ways of gaining information about the phone system, they learned how to use these audio frequencies to control the relays. This knowledge enabled one to make free long-distance phone calls and have teleconferences on "test lines" that were not meant to be accessible to anyone but the "linesmen" who set up the phone network. [A good resource on this history is Exploding The Phone by Phil Lapsley, author of the website The History of Phone Phreaking.]

Phreaking saw a huge increase in popularity in 1971 thanks to the Youth International Party (also known as the "Yippies"). Despite their name, the Yippies were not a political party, but a group of hippie activists that wanted to completely transform society by building a "New Nation". To quote [*including all of the extra explanatory links of the original, as well as another added by me]:

The Yippie "New Nation" concept called for the creation of alternative, counterculture institutions:

food co-ops;

underground newspapers and zines;

free clinics and support groups;

artist collectives;

potlatches, "swap-meets" and free stores;

organic farming / permaculture;

pirate radio, bootleg recording and public-access television;

squatting;

free schools;

etc.

Yippies believed these cooperative institutions and a radicalized hippie culture would spread until they supplanted the existing system. Many of these ideas / practices came from other (overlapping and intermingling) counter-cultural groups such as the Diggers, the San Francisco Mime Troupe, the Merry Pranksters / Deadheads, the Hog Farm, the Rainbow Family, the Esalen Institute, the Peace and Freedom Party, the White Panther Party and The Farm. There was much overlap, social interaction and cross-pollination within these groups and the Yippies, so there was much crossover membership, as well as similar influences and intentions.

"We are a people. We are a new nation," YIP's New Nation Statement said of the burgeoning hippie movement. "We want everyone to control their own life and to care for one another ... We cannot tolerate attitudes, institutions, and machines whose purpose is the destruction of life, the accumulation of profit."

The goal was a decentralized, collective, anarchistic nation rooted in the borderless hippie counterculture and its communal ethos...

They started the Youth International Party Line (YIPL) in July of that year. YIPL was an underground newsletter that saw phreaking as a way to make a political statement. But the potential of using that knowledge to commit crimes also hit the Mainstream through the article Secrets of the Little Blue Box by Ron Rosenbaum, published in the October issue of Esquire. The following year, a political magazine called Ramparts published a how-to article that was confiscated by police and telephone security personnel. That type of activity would only intensify in the years to come...

[Taken from YIPL Issue #2, July 1971. This is an interesting letter by Abbie Hoffman, one of the co-founders of the YIP, to someone who read his book and criticized his suggestions of using free phone calls as an act of protest. While we differ in our approach towards achieving it, I am particularly fond of the following quote: "As the level of technological development increases, the costs should decrease with the goal being to make everything produced in a society free to all the people, come who may. Neat, huh?" Yes! Let's make things that serve everyone and share them freely, rather than try to take control of what already exists by force. Manipulation and fighting will naturally fall away.]

Telephones and computers have a long history together. The scientists at Bell Labs, the research division of the massive American Telephone & Telegraph Company (or AT&T), had a hand in many projects related to computing. For example, they helped to develop the "modulator-demodulator" (more commonly known as the "modem"). This device would turn the electrical pulses used inside of computers (a "digital" signal) into the audio frequencies used on the telephone network (an "analog" signal), and vice versa. This would allow computers to communicate with one another over vast distances through the telephone lines. Therefore, the terms "hacking" and "phreaking" became nearly synonymous with one another as groups of hackers and phreakers started to converge.
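
[For those who like to tinker, here is a rough sketch in Python of what a modem does: each bit is turned into a short burst of one of two audio tones, and the receiving side works out which tone it is hearing. The sample rate, speed, and tone frequencies below are my own illustrative assumptions, loosely in the range that early dial-up modems used. It is only a toy model of the idea, not a description of any actual device.]

```python
import math

# All of these values are illustrative assumptions, not taken from the text.
SAMPLE_RATE = 9600                      # audio samples per second
BAUD = 300                              # bits sent per second
SAMPLES_PER_BIT = SAMPLE_RATE // BAUD   # 32 samples of audio for each bit
MARK_HZ = 1270                          # tone that stands for a 1 bit
SPACE_HZ = 1070                         # tone that stands for a 0 bit


def modulate(bits):
    """Digital to analog: turn each bit into a short burst of tone."""
    samples = []
    phase = 0.0
    for bit in bits:
        freq = MARK_HZ if bit else SPACE_HZ
        for _ in range(SAMPLES_PER_BIT):
            samples.append(math.sin(phase))
            # Keep the phase running so the wave stays smooth between bits.
            phase += 2 * math.pi * freq / SAMPLE_RATE
    return samples


def tone_strength(chunk, freq):
    """Measure how strongly one frequency is present in a chunk of audio."""
    step = 2 * math.pi * freq / SAMPLE_RATE
    in_phase = sum(s * math.cos(step * n) for n, s in enumerate(chunk))
    quadrature = sum(s * math.sin(step * n) for n, s in enumerate(chunk))
    return in_phase ** 2 + quadrature ** 2


def demodulate(samples):
    """Analog to digital: decide, burst by burst, which tone was sent."""
    bits = []
    for start in range(0, len(samples) - SAMPLES_PER_BIT + 1, SAMPLES_PER_BIT):
        chunk = samples[start:start + SAMPLES_PER_BIT]
        is_mark = tone_strength(chunk, MARK_HZ) > tone_strength(chunk, SPACE_HZ)
        bits.append(1 if is_mark else 0)
    return bits


if __name__ == "__main__":
    message = [1, 0, 1, 1, 0, 0, 1, 0]
    audio = modulate(message)
    print(demodulate(audio) == message)
```

[This technique of switching between two tones is generally called "frequency-shift keying". A real phone line adds noise and distortion, which this sketch ignores.]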

The programmers at Bell Labs also worked on Multics with Project MAC before leaving to produce another operating system called Unix. One aspect of Unix is UUCP (or "Unix-to-Unix Copy"). It allows two computers that are both running Unix to share files, thus forming a simple computer network. In 1979, Tom Truscott and Jim Ellis were students at Duke University in Durham, North Carolina. They used UUCP to talk to Steve Bellovin, a student at UNC, another college about 9 miles away in Chapel Hill.

Along with another Duke University student named Stephen Daniel, they made the software that would become the foundation for The User's Network (or Usenet), a way for people across a computer network to converse about different subjects together. These dialogues are referred to as "newsgroups". We might think of Usenet as a precursor to what we now call an "Internet forum", but one could only access it if their local college campus had a minicomputer that was part of the network.

A similar system called BITNET would be formed in 1981, co-created by Ira Fuchs at the City University of New York (CUNY) and Greydon Freeman at Yale University in New Haven, Connecticut. Academic computer networks started to proliferate.

In the early 1980's, computers were starting to become a regular fixture of daily life for people who were not computer specialists. From the outset, there were concerns about overdependence on technology, loss of jobs through automation, violations of privacy by using computers for government surveillance, and so on. [Some of those problems are captured within the 1983 book, The Rise of the Computer State by David Burnham.] But there was also some degree of hope that computers could still be used to empower both individuals and groups, so long as everyone was computer literate.

Seymour Papert, a researcher at MIT, helped to design an educational programming language called Logo during the late 1960's. By 1980, he had published a brilliant book entitled Mindstorms: Children, Computers, and Powerful Ideas, which describes how people of all ages can learn complex concepts if they are presented in a manner that meshes with how we naturally learn. He also demonstrated how computers could be a helpful tool for doing this by providing a context that allows one to play around with concepts in order to understand how they work.

Microcomputers steadily trickled into public schools and libraries through programs like ComputerTown, started by PCC in 1981. They even put together a book on how to make your own "ComputerTown". [One of the co-authors of this book, Liza Loop, started the non-profit LO*OP Center in 1975. It houses the History of Computing in Learning and Education (HCLE) collection. More recently, Liza has given several retrospective talks on how the introduction of microcomputers affected teaching/learning and how computer literacy has changed over time.]

Similar events were unfolding within the United Kingdom. For example, at the end of 1981, the British Broadcasting Corporation (BBC) worked with Acorn Computers to make the BBC Micro as part of their Computer Literacy Project. The next year, the BBC aired a television series named The Computer Programme that showed how to use it. They also made an accompanying book, The Beginner's Guide to Computers by Robin Bradbeer, Peter De Bono, and Peter Laurie.

1982 was a watershed for home computers. Many companies rushed to join what the media referred to as the "microcomputer revolution". The ZX Spectrum was released by Sinclair Research in April, the Dragon 32 was released by Dragon Data in August, and the Oric-1 was released by Tangerine Computer Systems in September. "The 1977 Trinity" was a similar wave of microcomputer releases that occurred within the United States a few years before. It included the PET by Commodore International, the Apple ][ by Apple Inc., and the TRS-80 by Tandy Corporation. It was followed by the Atari 8-bit family a couple of years later. The same companies began to quickly iterate designs (e.g.: the VIC-20 released in 1980-81 and the Commodore 64 released in 1982).

The only problem: There was little hardware or software compatibility across all of these different microcomputers! Each company had its own line of products, and a program that ran on one computer would not necessarily work on another.

One microcomputer that had a huge impact on the situation was the Personal Computer (or PC). It was released by International Business Machines (more commonly known as IBM) in August of 1981. As their name implies, IBM mainly sold computers to other businesses up until this point in time. But larger corporations could no longer afford to ignore what all of the computer hobbyists were doing and started to emulate their methods.

The IBM PC was designed with an "open architecture", meaning that parts were "standardized" (or built to a common set of specifications) to allow them to be easily swapped out with "off-the-shelf" components (i.e.: what one could get at the average electronics supplier). While this made it fast and cheap for IBM to produce, it also made it easy to "clone". A clone is a computer that could use whatever was made for the IBM PC, but that was available at a much lower price point. When IBM pushed for a different standard in an attempt to regain dominance, the companies that were producing clones banded together to keep the architecture open. Thus, the term "Personal Computer" became almost synonymous with microcomputers in general as many manufacturers switched over to that architecture.

A variety of "user groups" were formed to help people figure out how to use all of this new technology. Some of them were put together by hobbyists and others by computer companies to explain their products. More "homebrew" groups appeared all over the world too, such as the Chaos Computer Club (CCC) in Germany, which was established in 1981. The CCC has held an annual conference about computer-related subjects, the Chaos Communication Congress, since 1984.

Unfortunately, "materialism" (as a general attitude, not a specific philosophy) reached a climax within the 1980's as well. To quote:

The 1980s have been called the decade of materialism in the United States. However, the U.S. did not suddenly emerge as a material culture; the 1980s were a culmination of decades of materialistic pursuit. As early as the first half of the nineteenth century, de Tocqueville commented on Americans' avid pursuit of material well-being. Contemporary social observers describe a similar state of affairs.

The signs of materialism include self-absorption to the exclusion of others and a desire for immediate gratification. In addition, possession comes to be valued over other goals such as personal development, relationships with others, and the work ethic: the ascension of materialism as a central value may shape the nature of other values. Material objects also represent success and status in contemporary U.S. culture. Thus, materialists make use of tangible objects to signify success.

Because material objects have become so important in our society, people tend to amass more and more objects. These accumulations of objects lead to a great deal of "noise" in any attempts to use these objects to communicate. Thus, people need more and stronger signals to break through the noise in order to communicate effectively (or, indeed, at all)...

The first step of objectification is alienation, a separation between people to the point where they can no longer communicate as equals.

As we saw above, "commercialization" was rapidly starting to increase, so computer hardware and software were becoming progressively more "proprietary". In other words, there was a trend towards creating and selling products whose functioning was purposely hidden from whoever was using them, instead of freely sharing schematics and source code.

Worse, during the previous decade, the entire 1960's Counterculture had been steadily "commodified" (i.e.: turned into a product that could be bought and sold). The constructive values that underlay it were completely undermined through advertising, and with its purposes derailed, some of its worthwhile accomplishments were even reversed. [The article The Commodification of Love: Gandhi, King and 1960s Counterculture by Alexander Bacha and Manu Bhagavan provides some particularly lucid examples. It dovetails nicely with the article that we have already posted in this thread, Changing The World Through Love.]

Richard Stallman, a hacker at MIT, attempted to mitigate part of the situation in regards to computers. He started The GNU Project in 1983 with the goal of making a Unix-like operating system. Anyone could have the source code and alter it to do what they needed because it wouldn't be owned by AT&T. This would also make it easier to adapt it for use on different types of computers.

He explained his stance on software at the 1984 Hackers Conference in Sausalito, California (about 4 miles from San Francisco). But by that time, the Hacker Ethic had already started to become distorted within the Mainstream, merging with the business concepts of "entrepreneurship" and "venture capital". So, in 1985, he released The GNU Manifesto to explain the reasoning behind The GNU Project. He also started the non-profit Free Software Foundation (FSF) to help further the philosophy of "free software".

The term "free" here does not mean that it is available without cost, nor does it refer to the fact that its inner workings are "open" (i.e.: available to anyone who wants to understand them). While both of these are important, they are only aspects of a more fundamental issue: how people are treated. Therefore, in this context, "free" means that a system preserves the rights (i.e.: "freedoms") of those who use it. These "four essential freedoms" are:

- The freedom to run the program as you wish, for any purpose (freedom 0).

- The freedom to study how the program works, and change it so it does your computing as you wish (freedom 1). Access to the source code is a precondition for this.

- The freedom to redistribute copies so you can help others (freedom 2).

- The freedom to distribute copies of your modified versions to others (freedom 3). By doing this you can give the whole community a chance to benefit from your changes. Access to the source code is a precondition for this.

The first two protect the individual, while the last two protect the community. They help us to carefully distinguish between when "the users control the program" and when "the program controls the users". The difference between the two situations is enormous.

This philosophy inspired groups of activists who were looking into using computers for the purpose of sharing relevant information internationally, such as the 1984 Network Liberty Alliance.

Along with the increasing prevalence of the home computer, modems were also becoming more common throughout the 1980's. This led many people towards starting their own Bulletin Board Systems (or BBS). A BBS is a computer that other people can connect to by dialing a specific phone number. This would allow anyone that was connected to that computer to leave messages or files on it, much like a public bulletin board. The person who operated that computer was called a "system operator" (or sysop, for short).

During a blizzard in the winter of 1978, two programmers named Ward Christensen and Randy Suess wrote one of the first pieces of software that made the BBS possible. It was called the Computerized Bulletin Board System (CBBS). It grew out of experiments by members of an amateur computer group known as the Chicago Area Computer Hobbyists' Exchange (CACHE). They wanted to share newsletters with each other, so Randy and Ward created CBBS as a means of doing that.

Each BBS functioned a lot like Community Memory, but in miniature. Instead of many terminals connected directly to a single minicomputer, there were many microcomputers connected through their modems to a single microcomputer running BBS software.
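
[To make the "public bulletin board" idea concrete, here is a tiny sketch in Python of the kind of record-keeping that a BBS does for its callers. The names `BulletinBoard`, `post`, `list_subjects`, and `read` are hypothetical ones that I made up for illustration. Actual BBS software also had to answer the modem, handle logins, and store files, all of which is left out here.]

```python
from dataclasses import dataclass, field


@dataclass
class Message:
    author: str
    subject: str
    body: str


@dataclass
class BulletinBoard:
    """A toy model of the single computer that every caller dials into."""
    name: str
    sysop: str
    messages: list = field(default_factory=list)

    def post(self, author, subject, body):
        """Pin a new message to the board and return its number (from 1)."""
        self.messages.append(Message(author, subject, body))
        return len(self.messages)

    def list_subjects(self):
        """Show what a caller would see when browsing the board."""
        return [
            f"{number}. {message.subject} ({message.author})"
            for number, message in enumerate(self.messages, start=1)
        ]

    def read(self, number):
        """Fetch one message by the number shown in the listing."""
        if not 1 <= number <= len(self.messages):
            raise IndexError(f"no message numbered {number}")
        return self.messages[number - 1]


if __name__ == "__main__":
    board = BulletinBoard(name="Example BBS", sysop="the sysop")
    board.post("first caller", "Hello", "Is anyone out there?")
    board.post("second caller", "Re: Hello", "Yes! Welcome aboard.")
    for line in board.list_subjects():
        print(line)
```

[Each caller connects, reads or leaves messages, and hangs up so that the next person can dial in. The board itself is just a shared list that lives on the sysop's computer.]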

In 1984, another computer programmer named Tom Jennings tried to come up with a way to share messages with two other BBS system operators, John Madill and Ben Baker. This resulted in FidoNet, a piece of software that allowed one to transmit information across BBS systems in order to create a network of multiple BBS. In 1986, a BBS system operator named Jeff Rush programmed EchoMail, which allowed FidoNet to work in a manner similar to Usenet.
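
[One way to picture messages spreading across a network of BBS is the sketch below, written in Python. Each system keeps a copy of every message it receives and hands it on to the systems that it is linked with, skipping anything it has already seen. The `Node` class and its methods are hypothetical names of my own. This is a deliberately simplified model of the general idea and not how FidoNet or EchoMail were actually programmed. Real systems exchanged their mail in batches over scheduled phone calls rather than instantly.]

```python
class Node:
    """A toy stand-in for one BBS in a network of BBS."""

    def __init__(self, name):
        self.name = name
        self.neighbors = []   # other systems that this one exchanges mail with
        self.seen = {}        # message id -> message text

    def link(self, other):
        """Set up a two-way exchange between this system and another."""
        self.neighbors.append(other)
        other.neighbors.append(self)

    def receive(self, message_id, text):
        """Store a message, then pass it along to every linked system."""
        if message_id in self.seen:
            return  # already have it, so do not pass it around again
        self.seen[message_id] = text
        for neighbor in self.neighbors:
            neighbor.receive(message_id, text)


if __name__ == "__main__":
    a, b, c = Node("A"), Node("B"), Node("C")
    a.link(b)
    b.link(c)
    c.link(a)   # a loop in the network, handled by the "seen" check
    a.receive("A-1", "Hello from system A!")
    print(sorted(node.name for node in (a, b, c) if "A-1" in node.seen))
```

[The check for messages that have already been seen is what keeps a message from circulating forever when the links between systems form a loop.]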

The type of conversations that were only available through large academic computer networks like Usenet had become accessible to the individual computer hobbyist in less than a decade!

There were BBS for a wide variety of different interests. One particular type of neighborhood-run BBS was known as a "free-net". To quote:

A free-net was originally a computer system or network that provided public access to digital resources and community information, including personal communications, through modem dialup via the public switched telephone network. The concept originated in the health sciences to provide online help for medical patients. With the development of the Internet, free-net systems became the first to offer limited Internet access to the general public to support the non-profit community work...

Dr. Tom Grundner started St. Silicon's Hospital and Information Dispensary in 1984 in order to share medical information with the public on behalf of the Department of Family Medicine at Case Western Reserve University (CWRU). By 1986, it had transformed into The Cleveland Free-Net, one of the first of many free-nets around the world.

[This history is captured well in the eight-part series, BBS. If you are interested in trying to connect to the BBS that still exist, check out the series Back to the BBS.]

Generally, "virtual communities" of all kinds were starting to gain momentum. For example, Stewart Brand and Larry Brilliant created The Whole Earth 'Lectronic Link (The WELL) in 1985. Many of the conversations there revolved around practicing self-governance within the emerging concept of "cyberspace".

On the technical end, The WELL uses a piece of software called PicoSpan. It allows one to make or join public and private "conferences" (i.e.: text-based dialogues with a group of other members). On the social end, access to The WELL is subscription-based and everyone uses their actual names. Those two features were intended to encourage self-moderation. There was also a rule known as "You Own Your Own Words" (YOYOW) that members would agree to follow upon signing up. This meant that each person would take responsibility for what they said (including any legal ramifications). However, some interpreted it as meaning that they owned the copyright to whatever they posted and that it couldn't be shared elsewhere without their consent.

[The book, The Virtual Community by Howard Rheingold, details his personal experiences with The WELL and some of the history behind virtual communities in general. He has also written the wonderful book, Tools for Thought, which covers the development of computers.]

Around this same time, the destructive potential of hacking/phreaking was thrust into the Mainstream again through movies like 1983's WarGames. There was also the rise of "cyberpunk", a sub-genre of science fiction that deals with dystopian technology. A well-known example is the 1984 novel, Neuromancer by William Gibson. However, these sorts of themes were foreshadowed within previous decades, like in The Shockwave Rider by John Brunner (published in 1975) and Colossus by D. F. Jones (published in 1966). It might seem strange to mention works of fiction, but life often imitates art, and vice versa.

[A screenshot from the movie WarGames. If one's first inclination after watching this movie is to try to gain access to a government computer, then I think they've missed the point.]

In summary: The 1980's seemed to be marked by both a distrust of kids with computers and a rush to put as many computers as possible within their reach. Simultaneously, an entire scene of hackers/phreakers that were critical of "authority" was flourishing on international BBS and written about in magazines like 2600. [A couple of interesting examples of hacking during this era are:

- Out of the Inner Circle by Bill Landreth

- Underground by Suelette Dreyfus and Julian Assange (with a corresponding documentary, In the Realm of the Hackers)]

Businesses and government institutions scrambled to find ways to protect their relatively new computer networks. The term "hacker" started to take on a negative connotation, especially as some got ahold of "secret" information and tried to sell it. To differentiate between the motivations of individuals, some used the term "cracker" for those who gained access to a computer network in order to commit crimes or to terrorize others, and the term "hacker" for one who accessed a computer network only for the sake of learning and exploring.

That dichotomy is captured well by the famous essay, The Conscience of a Hacker (sometimes known as The Hacker Manifesto). This essay was written in 1986 by Loyd Blankenship (who went by the alias "The Mentor"). It was first published within an underground hacking magazine called Phrack. To quote:

[...] We make use of a service already existing without paying for what could be dirt-cheap if it wasn't run by profiteering gluttons, and you call us criminals. We explore... and you call us criminals. We seek after knowledge... and you call us criminals. We exist without skin color, without nationality, without religious bias... and you call us criminals. You build atomic bombs, you wage wars, you murder, cheat, and lie to us and try to make us believe it's for our own good, yet we're the criminals.

Yes, I am a criminal. My crime is that of curiosity. My crime is that of judging people by what they say and think, not what they look like. [...]

That might sound a bit dramatic, but one must also keep in mind the historical context in which it arose. There was a growing distrust of the government in the early 1970's because of the release of "The Pentagon Papers", evidence that the military presence within Vietnam had increased despite the lack of public support for the war there. There was also the Watergate Scandal, the discovery of illegal espionage and sabotage being carried out by the president against his political rivals. Those events were prominently covered by The New York Times and The Washington Post, respectively.

Vast amounts of government corruption were also uncovered in the mid-1970's through The Church Committee. Some examples of what was found [Content Warning]:

- People within the Central Intelligence Agency (CIA) were carrying out some very sadistic human experimentation programs through Project MKUltra.

- People within the Federal Bureau of Investigation (FBI) were involved in the assassination of activists through the Counter Intelligence Program (or COINTELPRO).

- People within the National Security Agency (NSA) were spying on everyone's telecommunications without any warrants through Project SHAMROCK.

And that is on top of the corporate co-opting of computing, and business-induced ecological problems like the Three Mile Island incident in 1979. Again, we see Commerce and Control creating severe problems for humanity as a whole. All of that would set the tone for the end of the decade and the beginning of the next...

In 1989, Richard Stallman released the GNU General Public License (GPL), a legal framework that would help keep software free. Anytime someone altered the source code to a program that had the GNU GPL, they would have to share it under the same terms.

Much of the creation of GNU was thanks to many hardworking volunteers who wanted everyone to benefit from it. We might refer to this process as "mass collaboration", and it may or may not involve the exchange of money for programming. Michael Tiemann, David Henkel-Wallace, and John Gilmore also formed a company called Cygnus Solutions in 1989 to financially support the development of free software.

In 1990, the United States Secret Service carried out Operation Sundevil, an attempt to break up the hacking/phreaking communities existing throughout the United States. The computers behind twenty-five different BBS were seized and three people were arrested.

John Perry Barlow and Mitch Kapor, along with John Gilmore and several others, founded the Electronic Frontier Foundation (EFF) in response to some of the court cases that resulted from Operation Sundevil. It quickly became apparent that many people within law enforcement lacked computer literacy, and crimes related to information technology were relatively new, so detailed laws for handling them did not exist. Those involved with the EFF wanted to make sure that people's constitutional rights (i.e.: those within The Bill of Rights) were not being violated.

[The book The Hacker Crackdown by Bruce Sterling provides a humorous and insightful explanation of these events.]

By the late 1980's, one of the final pieces of software needed to make GNU into a full operating system was a specific part known as the "kernel". Linus Torvalds, a student at the University of Helsinki in Finland, started a personal project to create a kernel in 1991. He was inspired by Minix, an operating system based on Unix that was intended for students to learn about how operating systems work.

In October of that same year, Linus released a workable version of the kernel. People throughout the free software community, as well as those who were interested in Minix, helped to develop it. It was eventually called "Linux" (which could be interpreted as short for "Linus' Unix", though this was not the original name).

Linus released it under the GPLv2 license in 1992. Many people combined it with various programs from GNU to make a full operating system. These "distributions" came to be generally known as "Linux". Some of the first were Slackware and Debian, both released in 1993. [Nowadays, one can easily download and install a GNU/Linux distribution for themselves.]

Meanwhile, throughout the 70's and 80's, several programmers in the Computer Systems Research Group (CSRG) at Berkeley were extending the functionality of Unix. In order to use Unix at the time, one had to pay a very expensive licensing fee to AT&T, but they also got source code that they could modify to suit their own needs.

Keith Bostic and several other members of the CSRG tried to systematically remove or replace the parts of the source code that were owned by AT&T, so that other academic researchers could use the software on their own computer networks. This was generally known as the Berkeley Software Distribution (BSD). The first version, "Networking Release 1" (Net/1), came out in 1989. The second version, "Networking Release 2" (Net/2), came out in 1991.

A fully-fledged, free operating system for microcomputers was made out of Net/2 by the couple Lynne and William Jolitz. It was called 386BSD. Both Lynne and William had studied computer science at Berkeley and worked extensively with BSD. They described the process of making 386BSD in a series of articles published within Dr. Dobb's Journal, and wrote a textbook on how the kernel works a few years later.

386BSD was released in 1992, and it eventually became the basis for FreeBSD and NetBSD, both released in 1993. Between the various "flavors" of GNU/Linux and BSD, many computers throughout the world started using free Unix-like operating systems.

Another aspect of programming that was coming to a head around this time was the use of software for "cryptography". Cryptography (literally "hidden writing") is the study of how to send and receive secret messages, keeping information "private" by sharing it only between specific parties. The less affected those messages are by tampering of some kind, the more "secure" that system of communication is. Government communications often make use of cryptography. To give a simple description of how it works:

A "cyphertext" is a secret code, a message that cannot be easily interpreted because the information that it contains is scrambled up in some way. Inversely, "plaintext" is something that is readily readable. "Encrypting" data consists of turning a plaintext into a cyphertext, while "decrypting" data is the opposite. Both encryption and decryption are done with a "key", some means of turning one type of data into another. Generally, the larger the key, the harder it is to decrypt something without it.
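To make those terms concrete, here is a minimal sketch in Python. It uses a repeating-key XOR, which is only a toy and is not secure (nor is it how DES or PGP work). The function names, the message, and the key are all made up for this illustration.

```python
from itertools import cycle


def xor_with_key(data: bytes, key: bytes) -> bytes:
    """Combine each byte of data with the repeating key using XOR.

    Because XOR undoes itself, the same function both encrypts and decrypts.
    """
    if not key:
        raise ValueError("key must not be empty")
    return bytes(b ^ k for b, k in zip(data, cycle(key)))


def keyspace(bits: int) -> int:
    """Number of possible keys of a given length in bits."""
    return 2 ** bits


plaintext = b"meet me at noon"      # readily readable
key = b"k3y"                        # hypothetical key for this example

ciphertext = xor_with_key(plaintext, key)   # encrypting: plaintext -> scrambled bytes
recovered = xor_with_key(ciphertext, key)   # decrypting: scrambled bytes -> plaintext

print(ciphertext)
print(recovered)

# Every extra bit doubles the number of keys someone would have to guess.
print(keyspace(56), keyspace(57))
```

Running it shows that the scrambled bytes only turn back into the original message when the same key is applied again, and that adding even one bit to a key doubles the amount of guessing needed to find it.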

A series of mathematical discoveries, software techniques, and hardware developments over the previous two decades had made it easier for the average person to encrypt and decrypt data using a microcomputer. This started with the development of the Data Encryption Standard (DES), which began in 1973 and was published as a federal standard in 1977. DES demonstrated a general process for using a computer to encrypt data.

It was followed up by the Diffie-Hellman Key Exchange described within the 1976 paper, New Directions in Cryptography by the mathematicians Whitfield Diffie and Martin Hellman. This allows two people to agree on a shared secret key over a public channel, even if they have never met: each one combines their own private value with a public value sent by the other.
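The arithmetic behind the key exchange can be sketched in a few lines of Python. This is only a toy under stated assumptions: the prime, the generator, and both private values are tiny numbers picked for readability, and the names "alice" and "bob" are the usual placeholders rather than anything from the paper. Real systems use very large, randomly chosen numbers.

```python
# Public values that everyone, including an eavesdropper, is allowed to see.
p = 23   # a small prime modulus (illustrative only)
g = 5    # a generator for that modulus (illustrative only)

# Private values that each person keeps to themselves.
alice_private = 6
bob_private = 15

# Each person computes a public value and sends it over the open channel.
alice_public = pow(g, alice_private, p)   # g^a mod p
bob_public = pow(g, bob_private, p)       # g^b mod p

# Each person combines the other's public value with their own private value.
alice_shared = pow(bob_public, alice_private, p)   # (g^b)^a mod p
bob_shared = pow(alice_public, bob_private, p)     # (g^a)^b mod p

# Both arrive at the same number without ever sending it.
print(alice_public, bob_public)
print(alice_shared, bob_shared)
```

An eavesdropper sees `p`, `g`, and the two public values, but recovering either private value from them is believed to be impractical when the numbers are large enough. The shared number can then serve as a key for encrypting messages.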

It was evident that individuals were becoming increasingly connected together into a "global village" (or in economic terms, a "network society"). There was a hope that the existence of a worldwide computer network would free everyone from oppressive governments by allowing people to interact directly. Many tried to use ideas from cryptography to make that happen.

One example is David Chaum's 1985 article, Security without Identification: Card Computers to make Big Brother Obsolete. The social implications of these types of systems started to become more apparent by the end of the 1980's. A good summary is Timothy May's Crypto-Anarchist Manifesto from 1988. To quote:

Computer technology is on the verge of providing the ability for individuals and groups to communicate and interact with each other in a totally anonymous manner. Two persons may exchange messages, conduct business, and negotiate electronic contracts without ever knowing the True Name, or legal identity, of the other. Interactions over networks will be untraceable, [...] Reputations will be of central importance, far more important in dealings than even the credit ratings of today. These developments will alter completely the nature of government regulation, the ability to tax and control economic interactions, the ability to keep information secret, and will even alter the nature of trust and reputation.

Using cryptography to circumvent government control was known as "crypto-anarchy", and one who attempted to program the software to do it was called a "cypherpunk". Two of the main goals of the cypherpunks were:

1. Freedom from government surveillance by making systems that allowed individuals to communicate privately

One example of this was the work of Phil Zimmermann, a peace activist who created a program called Pretty Good Privacy (PGP) in 1991. PGP allowed people to encrypt their own data with cryptography that was much more secure than anything else easily available to the public at the time. Phil gave PGP away for free and had its source code published in book form when it seemed as if the government was going to suppress it.

While some warned that making strong encryption widely available would protect the communications of terrorists, drug/weapon/human traffickers, and so on, Phil created PGP with the intention of protecting activists from surveillance by despotic governments. International anti-war protests increased sharply during the 1980's because of wars occurring within Central America and the corruption uncovered during The Iran-Contra Affair [Content Warning]. There were also many nuclear disarmament demonstrations, such as peace camps and marches, because of the tensions of the "Cold War". A couple of well-known protests around that time were the Greenham Common Women's Peace Camp established in England in 1981, and the Great Peace March for Global Nuclear Disarmament held in the United States during 1986.

2. Providing systems that could be controlled by individuals as alternatives to governments overcome by corruption (e.g.: the creation of "money" by a central bank)

The article The Cypherpunks by Haseeb Qureshi gives an excellent description of the reasoning behind the above example:

It's important to understand the cypherpunk take on economic philosophy. The cypherpunks were deeply suspicious of central banks and their control over monetary policy after the end of Bretton Woods. Many years later, their suspicions were arguably justified after the financial crisis of 2008, when central banks created massive amounts of money to bail out failing banks.

It's worth taking a brief excursion here. In general, governments have two ways to finance their operations.

The first is taxation, where a government directly transfers money from citizens into its coffers. The second way is by printing money, traditionally known as seigniorage, which also transfers money to the government, but is a little more subtle to analyze. When a government prints money, the government obviously acquires currency, but citizens often find that the value of their currency holdings has depreciated, since there's now more money chasing the same set of real assets.

Taxation and seigniorage are roughly economically equivalent, but taxation generally requires the assent of citizens, whereas printing money can be done unilaterally. The cypherpunks thus saw money printing as a form of theft from the holders of currency.

The cypherpunks believed that to have a form of money truly native to cyberspace, it would have to be free from government intervention. After all, the Internet itself was already borderless and international! An Internet-native currency ought to put everyone, regardless of nationality, on a level playing field. Tying a digital economy to a singular fiat currency would subjugate it to the whims of a single country's central bank.

Furthermore, such a system should not have a central party capable of surveilling it. Otherwise that central party would be tempted to censor the system or manipulate the currency. After witnessing the many financial crises and hyperinflations in the 20th century, the cypherpunks believed that the soundest economic system was one that no one could manipulate.

As those kinds of issues became an ever growing concern, more people began to converse about them openly. For example, the Computer Professionals for Social Responsibility (CPSR) alliance sponsored the first Computers, Freedom, and Privacy (CFP) Conference in 1991 at Burlingame, California (around 14 miles away from San Francisco).

John Gilmore, Timothy May, and Eric Hughes formed the Cypherpunk Mailing List in 1992 to further develop cryptographic techniques and the philosophy behind their use, as well as to make predictions of their impact on society. This led to documents like Eric's Cypherpunk Manifesto in 1993 and Tim's Cyphernomicon in 1994. Meetings were also held at the Cygnus Solutions offices in Mountain View, California (around 38 miles from San Francisco).

Some people within the United States government wanted to keep cryptography limited to government use or restrict the public's access to it in some way. Since WWII, cryptography had been considered a type of "munition" (i.e.: a weapon) that could not be exported to other countries. They also argued that law enforcement should be allowed to break encryption by building a "backdoor" into every electronic device as a matter of "national security".

Meanwhile, the cypherpunks saw access to cryptography as a human right and argued that sharing source code, including software for cryptography, is a type of "free speech". They started sharing cryptographic information far and wide. Several organizations, like the EFF and the Electronic Privacy Information Center (EPIC), also became involved in court cases and protests against the U.S. government as a result.

Those events were informally referred to as the "Crypto Wars". [A couple of great documentaries exploring the different goals of the cypherpunks and related groups are NHK's Crypto Wars: America and ReasonTV's Cypherpunks Write Code.]

The idea of computer security (i.e.: "cybersecurity") grew rapidly as the 90's continued. Hacking conferences similar to the Chaos Communication Congress started to proliferate.

Some of the first within the United States were Summercon started in 1987 by Phrack magazine, and HoHoCon started in 1990 by a hacking crew known as the Cult of the Dead Cow (cDc).

DEF CON was started in 1993 by Jeff Moss (who goes by the alias "Dark Tangent"). Hackers On Planet Earth (HOPE) was started in 1994 by Eric Corley (who goes by the alias "Emmanuel Goldstein"). Eric is also co-founder of 2600 magazine, along with David Ruderman.

The crowds of such conferences would eventually be made up of a wide spectrum of people, from part-time hobbyists to full-time government agents, with employees of various computer and information-related businesses everywhere in-between. The language used to describe hacking started to transform again as well. One "wore" a different colored "hat" depending on the nature of their hacking:

- A "white hat" symbolizes someone who breaks into a computer network with the permission of the "owner" of said network. This is known as "penetration testing" (or pentesting, for short). Nowadays, some might also use the term "ethical hacking". When it is done as part of a group, it is called "red teaming". Many companies and governments are happy to hire such people to secure their systems.

- A "black hat" is synonymous with the term "cracker", someone who breaks into computer networks without permission (gaining "unauthorized access"), usually to commit a crime or do some type of malicious activity. It may include "cybermercenaries" that hack-for-hire (like NSO Group, Dark Basin, Appin Security, etc.).

- A "grey hat" is a person who straddles the boundary between the above two categories.

The Internet of the early-1990s was often likened to the "wild west", and this hat metaphor comes from the genre of Western movies where the "villain" often wears a black cowboy hat, while the "hero" dons a white cowboy hat. Of course, the classification of someone as wearing one hat or another depends on the ethics of the one doing it, as well as the laws of the land in which they are located. Most people do not distinguish between those different categories, defining the word "hacking" as breaking into a computer network.

It seemed as if the hacking of the mid-1980s, which emphasized exploring technology and playing silly pranks, was starting to disappear. The imprisonments of hackers like Kevin Mitnick and Edward Cummings in 1995 are a couple of extreme examples. [Freedom Downtime is a good documentary on these events.]